Edit: there is now a published paper based on this blog post. Published version here; draft here

On hardware, there’s a great illustrated history of computers here (and a timeline). For econometric software, I relied on Charles Renfro’s massive history. I have tried to classify the various ways in which computerization affected the development of economics here and I list further research questions here. Comments welcome.

1930s

Leontief tries to solve 44 simultaneous equations on a mechanical computer developed by John Wilbur at MIT and fails. The computing capacities at that time, Dorfman recalls, enabled economists to solve 9 simultaneous equations in 3/4 hours.

Late 1940s

While the first general-purpose electronic computer, the Electronic Numerical Integrator and Computer (ENIAC), becomes operational in 1946, economists’ fascination with machines is then still related to the use of physics metaphors and tools to build economic models. In 1949, for instance, engineers Walter Newlyn and Bill Phillips (of the Phillips curve) built a hydromechanical analogue computer to simulate monetary and national income flows, the MONIAC.

Phillips’s MONIAC

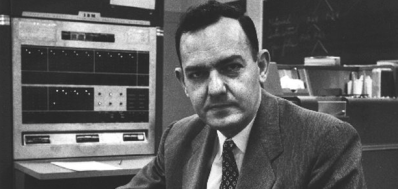

Less known are the analogue electrical-mechanical devices Guy Orcutt built during the 1940s to compute solutions to complex duopoly and spatial location problems, and his regression analyzer. In these years, statistical research was carried out on desktop calculators and punch-card tabulators. Typical problems such as Stigler’s diet problem (finding the minimum cost of a diet involving 9 dietary elements and 77 foods while meeting nutritional standards) took 120 man-days to compute by hand.

Orcutt’s regression analyzer

1947-1948

(Techniques): At Los Alamos, Von Neumann et al. wrote down the first stored program. The program is used to run Monte Carlo simulations on the ENIAC, with the purpose of modeling nuclear fission.

(Hardware, techniques) Leontief created HERP to work on the Harvard Mark II. He and Mitchell solved an input-output system of 38 equations and 38 unknowns, and obtained special solutions for 98-equation systems. This took 59.5 hours of uninterrupted machine time, plus another 60 for set-up, tape-checking and trouble-shooting. Leontief was optimistic that the job of planning war mobilization could be mechanized.

1949

(Hardware) First EDSAC (Electronic Delay Storage Automatic Calculator) at Cambridge. At Harvard around that time, Leontief uses the Mark III/IV magnetic drum computers to do computations for input-output analysis models.

(Techniques) Orcutt and graduate student Cochrane (DAE-Cambridge) work on the application of least squares to time series and provide a two-step estimation procedure to handle autocorrelated processes. In his exploration of the consequences of autocorrelation for estimates, Orcutt used Monte Carlo simulation methods.
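
To make the two-step idea concrete, here is a minimal Python sketch. It is not Cochrane and Orcutt’s original computation: the single-regressor model, the helper names `ols` and `cochrane_orcutt`, and the simulated data (intercept 1, slope 2, AR(1) coefficient 0.7) are all assumptions made for illustration. Step one runs ordinary least squares and estimates the autocorrelation of the residuals; step two re-runs least squares on quasi-differenced data.

```python
import random

def ols(x, y):
    """OLS of y on a constant and one regressor; returns (intercept, slope)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope

def cochrane_orcutt(x, y):
    """Two-step estimate of y = a + b*x + u with u_t = rho*u_{t-1} + e_t."""
    # Step 1: plain OLS, then estimate rho from the lagged residuals.
    a0, b0 = ols(x, y)
    resid = [yi - a0 - b0 * xi for xi, yi in zip(x, y)]
    rho = (sum(e0 * e1 for e0, e1 in zip(resid, resid[1:]))
           / sum(e0 ** 2 for e0 in resid[:-1]))
    # Step 2: OLS on quasi-differenced data (the first observation is dropped).
    xs = [x1 - rho * x0 for x0, x1 in zip(x, x[1:])]
    ys = [y1 - rho * y0 for y0, y1 in zip(y, y[1:])]
    a_star, b = ols(xs, ys)
    # The transformed intercept equals a * (1 - rho).
    return a_star / (1 - rho), b, rho

# Hypothetical simulated data, not taken from any historical study.
rng = random.Random(0)
true_a, true_b, true_rho = 1.0, 2.0, 0.7
x, y, u = [], [], 0.0
for _ in range(500):
    u = true_rho * u + rng.gauss(0, 1)   # autocorrelated disturbance
    xi = rng.gauss(0, 1)
    x.append(xi)
    y.append(true_a + true_b * xi + u)

a_hat, b_hat, rho_hat = cochrane_orcutt(x, y)
print(f"a = {a_hat:.3f}, b = {b_hat:.3f}, rho = {rho_hat:.3f}")
```

In practice the two steps are often iterated until the estimate of rho settles; the sketch stops after one pass.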

Early 1950s

Cambridge: econ computation still largely done on Marchant electric calculators, takes days.

Use of the Doolittle method (see Goldberger’s recollections about computing by hand).

1950-1951

(techniques/modeling) Cowles Monograph 10, in which Koopmans et al. lay out the principles of structural econometrics. Introduces Limited Information Maximum Likelihood (work by Anderson and Rubin), which trades off some efficiency of the Full Information ML method for a lighter computational burden. Computation remains heavy. Most economists stick to least-squares methods. Formalization of the nature of the identification problem, and of the restrictions needed to identify systems of simultaneous equations.

Development of tests: Anderson and Rubin propose identification tests. Cowles gives a minor role to tests, but t-statistics are widely used nonetheless. Development of goodness-of-fit tests by Marshall and Christ (1951) (to test Klein’s macroeconometric equations, most of which fail). At DAE, Durbin and Watson develop a test for first-order residual correlation; Davis (1952) works on F-statistics.

1953

Lucy Slater computes econ data for Alan Brown from the Department of Applied Economics at Cambridge (takes minutes).

(techniques) Henri Theil develops two-stage least squares, in which he uses the second-moment matrix resulting from applying LS to the reduced form.

1954

(hardware) Klein and Goldberger (Michigan) shift from desktop calculators to an electronic computer to estimate moments (Renfro)

(Techniques/Modeling): Cowles Monograph 14

1955

Carnegie: Simon implements bounded decision on a JOHNNIAC. Writes the Logic Theorist program (check?)

1956

(Hardware/software/modeling) Iowa State U installs an IBM 650. Hartley and Gosvernor develop linear programming software, used by a team of agricultural economists (Heady). Inversion of a 98-row matrix (up to 198 rows in 1959)

1957

Orcutt (MIT), “A new type of socio-economic System”: introduces microsimulation. Orcutt had a background in physics and had used Monte Carlo simulation methods in the wake of Von Neumann. A large number of micro-units which don’t interact but transition from one state to another across time. Orcutt wanted to understand the resulting dynamics by aggregating these micro-units:

If samples of, say, 10,000 to 100,000 entities could be handled in a computer, a highly useful representation could be given of the evolving state of a theoretical population involving hundreds of millions of units. This idea, together with the large scale, high speed computers which were just beginning to become available, convinced me that it would be possible to solve microanalytic models involving recursive interaction through markets of very large populations of microentities. Actually implementing this was facilitated enormously by the creation around 1956 of the Computation Center for the New England Colleges and Universities, financed by I.B.M. and furnished with an early IBM 704

1958

(Modeling/techniques) Forrester (who had studied Wiener’s cybernetics at the MIT servomechanism lab) publishes Industrial Dynamics. Birth of “system dynamics,” a special type of simulation of the many parts of a system without interaction, designed to predict its evolution (book in 1961, case of General Electric)

(Techniques): Sargan formalizes the use of Instrumental Variables

1959

(Institution) Launch of the Social Systems Research Institute at Wisconsin by Orcutt

Early 1960s

(Hardware) Mainframe computers begin to be used widely, because of technical advances: transistorized computers become widespread; cooling and storage (RAM) improve. These computers become more reliable and affordable for institutions. Beginning of the era when one could estimate an equation’s parameters in less than 1 hour.

(Software) In that period, software is essentially the outgrowth of local needs for specific research projects. At Cambridge DAE, Mike Farrell and Lucy Slater write REG I, the first regression program for EDSAC, to estimate coal mining data. Development of econometric software packages such as MODLER (for the Brookings model).

(Models) Use of quadratic loss functions by Holt, Modigliani, Muth and Simon for production scheduling and inventory control, then macro planning (Theil 1964) to generate computationally feasible solutions to economic models.

1960

Brown and Stone (DAE, Cambridge) launch the Growth project

(Modeling) Leif Johansen publishes A Multisectoral Study of Economic Growth, usually seen as a foundational model for Computable General Equilibrium

(Theory) Hurwicz “Optimality and Informational Efficiency in Resource Allocation Processes” (first mechanism design questions)

(Institutions) Foundation of Hoggatt’s Berkeley computer laboratory to run experiments. Failed to produce any noteworthy experiment.

1962

The MIT department of economics and business school had 450 hours of computer time per month to allocate

Penn macroeconomists (Klein) worked with a Univac I/II. Meghnad Desai spent the whole summer extracting the latent roots of a symmetric matrix for a 6-variable Principal Component Analysis using a Monroe calculator

1965

(Hardware) At MIT, the econ and business departments buy an IBM 1620. At Cambridge (DAE): replacement of EDSAC 2 by Titan; increased use of FORTRAN

1966

(Modeling/techniques) Von Neumann’s posthumous The Theory of Self-Reproducing Automata:

“the heuristic use of computers is similar to and may be combined with the traditional hypothetical-deductive-experimental method of science. In that method one makes a hypothesis on the basis of the available information and derives consequences from it by means of mathematics, test the consequence experimentally, and forms a new hypothesis on the basis of the findings. This sequence is iterated indefinitely. In using a computer heuristically one proceeds in the same way, with computation replacing or augmenting experimentation.”

1967

It could take literally weeks to run simulations for large macroeconometric models (Ando to Modigliani on the FRB model).

1968

(data): beginnings of the Panel Study of Income Dynamics (PSID)

1969

(Data; institutions) Development of online databases. Foundation of Data Resources Inc by Otto Eckstein, of Chase Econometrics by Michael Evans, and of Wharton Econometric Forecasting Associates by Lawrence Klein (hosted by the Economic Research Unit at Penn). The idea of selling access to large-scale databases, forecasts, and time-sharing computing services was made possible by the development of mainframe-based dial-up access.

(Models/Techniques) “Urban dynamics”: urban system dynamics by Forrester. Concludes that then-popular low-cost housing programs are harmful. Generates much debate.

(Models) Schelling, “Dynamic Models of Segregation”: usually seen as the first explicit effort to apply Von Neumann’s theory of automata to economics. A large number of agents with limited characteristics interact with each other according to limited sets of rules. Schelling shows that evenly distributed characteristics result in spatial clustering of agents.
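
The mechanism can be sketched in a few lines of Python. This is a simplified illustration rather than Schelling’s own set-up: the wrap-around 20 by 20 grid, the 10% vacancy rate, the one-third tolerance threshold, the random relocation rule and all function names are assumptions chosen for the example. Agents of two types start out evenly mixed; any agent with fewer than a third of like neighbors moves to a random empty cell.

```python
import random

def neighbors(grid, r, c):
    """Types of the occupied cells among the 8 surrounding cells (grid wraps)."""
    n = len(grid)
    cells = [grid[(r + dr) % n][(c + dc) % n]
             for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
    return [v for v in cells if v is not None]

def like_share(grid, r, c):
    """Share of occupied neighbors with the same type as the agent at (r, c)."""
    nb = neighbors(grid, r, c)
    return 1.0 if not nb else sum(v == grid[r][c] for v in nb) / len(nb)

def mean_like_share(grid):
    """Average like-neighbor share over all agents: a crude clustering index."""
    n = len(grid)
    shares = [like_share(grid, r, c) for r in range(n) for c in range(n)
              if grid[r][c] is not None]
    return sum(shares) / len(shares)

def simulate(n=20, vacancy=0.1, threshold=1 / 3, sweeps=50, seed=0):
    rng = random.Random(seed)
    n_empty = int(n * n * vacancy)
    n_agents = n * n - n_empty
    cells = [0] * (n_agents // 2) + [1] * (n_agents - n_agents // 2) + [None] * n_empty
    rng.shuffle(cells)                       # evenly mixed starting point
    grid = [cells[i * n:(i + 1) * n] for i in range(n)]
    before = mean_like_share(grid)
    for _ in range(sweeps):
        unhappy = [(r, c) for r in range(n) for c in range(n)
                   if grid[r][c] is not None and like_share(grid, r, c) < threshold]
        if not unhappy:
            break                            # everyone is content: stop early
        rng.shuffle(unhappy)
        for r, c in unhappy:
            empties = [(i, j) for i in range(n) for j in range(n) if grid[i][j] is None]
            i, j = rng.choice(empties)       # relocate to a random empty cell
            grid[i][j], grid[r][c] = grid[r][c], None
    return before, mean_like_share(grid)

before, after = simulate()
print(f"mean share of like neighbors: {before:.2f} before, {after:.2f} after")
```

Comparing the two printed shares shows how far the population has drifted from its mixed starting point, even though no agent asks for a majority of like neighbors.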

Late 1960s

(Data) McCracken moves his DATABANK system to the Economic Council of Canada and tries to integrate model building, maintenance and use process in his software. The Canadian experience serves as a model to rethink data policies in the US. The establishment of Federal economic data centers is recommended by the Ruggles Committee (1965), the Dunn report and the Kaysen task force (1966).

(Software) At LSE, Sargan writes Autocode to help programming on the Atlas computer. Programs written by Durbin, Sargan, Wickens, Hendry; AUTOREG software library; many tests for model specification: the types of programs developed begin to distinctively embody the LSE approach. At MIT, then Berkeley, Robert Hall, aided by Ray Fair, Robert Gordon, Charles Bischoff and Richard Sutch, develops TSP, while Ed Kuh and Mark Eisner develop TROLL. Overall, in program development, the stress was placed on devising algorithms for parameter estimation, at the expense of data management support or human interface. The main constraint was neither limited speed nor core memory, but storage space.

(Techniques) Replacement of Newton’s method with the Gauss-Seidel algorithm, enabling more robust model solution programs (Renfro). According to Lawrence Klein, its success owed as much to the efficiency of the algorithm itself as to the associated possibility of producing standardized graphs and tables readily usable by government agencies.
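
For readers unfamiliar with Gauss-Seidel as a model solution technique, here is a minimal Python sketch. The three-equation Keynesian toy model, its coefficients and the function name `gauss_seidel` are assumptions made up for the example, not taken from any of the packages above. Each equation is evaluated in turn using the latest available values of the other variables, and the pass is repeated until nothing changes; no derivatives are needed, unlike with Newton’s method.

```python
def gauss_seidel(equations, start, tol=1e-10, max_iter=1000):
    """Solve a simultaneous model by repeated in-place substitution.

    equations: ordered list of (variable name, function of current values).
    start: dict of starting values for every endogenous variable.
    """
    values = dict(start)
    for iteration in range(1, max_iter + 1):
        largest_change = 0.0
        for name, rule in equations:
            new = rule(values)               # uses values already updated this pass
            largest_change = max(largest_change, abs(new - values[name]))
            values[name] = new
        if largest_change < tol:
            return values, iteration
    raise RuntimeError("Gauss-Seidel did not converge")

# Hypothetical toy model: consumption, investment, income identity.
G = 30.0                                     # exogenous government spending
model = [
    ("C", lambda v: 20.0 + 0.6 * v["Y"]),    # consumption function
    ("I", lambda v: 10.0 + 0.1 * v["Y"]),    # investment function
    ("Y", lambda v: v["C"] + v["I"] + G),    # national income identity
]

solution, n_iter = gauss_seidel(model, {"C": 0.0, "I": 0.0, "Y": 0.0})
print(solution, "after", n_iter, "passes")
```

Solving this toy model by hand gives Y = 200, C = 140 and I = 30, which is what the iteration approaches. Convergence is not guaranteed in general and can depend on the ordering and normalization of the equations.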

Early 1970s

(software) DRI develops EPS software; development of large statistical software packages (SAS/SPSS)

(theory) development of mechanism design (Hurwicz 72 “On Informationally Decentralized Systems”, Maskin’s thesis on Nash, Myerson)

(technique/modeling) Orcutt develops DYNASIM, a dynamic microsimulation of income model at the Urban Institute

1972

Meadows Report: uses system dynamics simulation to argue that our model of growth is unsustainable. Model accused of being too sensitive to ad hoc parameter specification.

(Software) Eisner and Kuh (MIT) release the first version of TROLL (Timeshared Reactive OnLine Laboratory). They have been working on the project since 1968. Zellner begins to work on BRAP to develop computational foundations for Bayesian analysis.

Creation of Annals of Economic and Social Measurement under the auspices of John Meyer at the NBER (exact year?)

1973

(Modeling/techniques) Herbert Scarf, The Computation of Economic Equilibria. Shoven and Whalley, “General Equilibrium with Taxes: A Computational Procedure and an Existence Proof,” Review of Economic Studies: the idea is to provide numerical estimates of efficiency and distributional effects within the same framework

1974

Alker: “Computer Simulations: Inelegant Mathematics and Worse Social Science.” Simulation is “poor science,” models never fully tested empirically

1976

Arlington Williams writes the first electronic double auction program for PLATO in the TUTOR language

Late 1970s, early 1980s

(Data) Increase in panel data production: National income Longitudinal Survey (1979?); Survey of Income and Program Participation begins in 1983. Tax data becomes publicly available in the UK in 77 or so (interview).

(Techniques) Development of Automated Theorem Proving in some branches of mathematics at Argonne, Chicago

(Hardware) gradual replacement of mainframe computers with PCs

(Software) Chase develops XSIM, an integrated and user-friendly commercial adaptation of TROLL. Various packages reflect diverging approaches to econometrics: at LSE, AUTOREG evolves into Hendry’s GIVE, reflecting the influence of the General-to-Specific methodology. TSP develops as a broad package, more focused on estimation. It integrates developments in non-linear estimation methods. Development of special-purpose software (BRATS, SHAZAM, Autobox). Need to enable spectral analysis, then ARIMA and VAR

1980

(Techniques/modeling) Sims outlines the VAR program in “Macroeconomics and Reality,” Econometrica

1981

(hardware) Introduction of the IBM PC; diskette storage; programming languages included Assembler, Basic, Fortran, Pascal

Switch to IBM PC immediate in the Computable General Equilibrium community (interview). Simulation computation time goes from 6 hours around 78 to minutes in 81

1982

(Techniques) Kydland and Prescott (Minnesota), birth of calibration in macroeconomics

(Techniques) Engle introduces Autoregressive Conditional Heteroscedasticity processes.

Rassenti-Smith: first computer-assisted combinatorial auction market: “lab experiments become the means by which heretofore unimaginable markets designs could be performance tested” (Smith 2001)

1983

(Hardware) IBM PC XT; its 10 MB hard drive supports large-scale computing

1984

(Institution) Foundation of the Santa Fe Institute; George Cowan (a physical chemist) becomes its first president

1985

(Institution/hardware/software) Vernon Smith sets up his Economic Science Lab to run experiments at Arizona. It used PLATO (Programmed Logic for Automated Teaching Operation), a programmed learning system, to enable interactivity (for trading and auction experiments). More than 40 software applications were developed

1987

(Modeling) First Santa Fe conference on “the economy as an evolving, complex system”: 10 economists gathered by Arrow and 10 bio and computer scientists. Economists defend their axiomatic approach, according to Colander.

(Institutions, Hardware, Software) Plott opens his Caltech EPPS Lab with 20 networked IBM PS/2s and MUDA (Multiple Unit Double Auction), IBM DOS software developed by Lee. It supported several types of market organization. MUDA was quickly adopted by many other universities.

1988

(Modeling/techniques) Ostrom, “Computer Simulation: the Third Symbol System”: usually considered the paper whereby simulation emancipated from being a mere reformulation of mathematically-written models to become a distinct modeling practice with a distinct language.

(Institution) Creation of Computer Science in Economics and Management journal (later Computational Economics)

Late 1980s

(Software) Beginning in 1985, most mainframe software becomes available for PCs; introduction of microcomputer compilers. Some mainframe software ceases to be maintained (EPS, TROLL and XSIM) → larger market for software. Proliferation of test statistics; addition of time-series modules. New software developed for DOS (Stata intended to offer data management, graphical analysis, time series, cross section, panel econometrics)

Early 1990s

(Software) EViews, BACC by Geweke and McCausland for Bayesian analysis, WinSolve for RBC. DOS to Windows conversion usually involves a shift from command-line to graphical interfaces. Along with all-purpose statistics software and econometrics packages, a third type of package based on high-level programming and matrix languages develops, giving more flexibility to program new econometric designs (Gauss, Ox, Matlab, R)

1991

(modeling) McCabe, Rassenti, “Smart Computer-Assisted Markets,” Science. Experimental study of auction markets designed for interdependent network industries. Lab experiments used to study “feasibility, limitations, incentives and performance of proposed market designs for deregulation, providing motivation for new theory”

1993

(modeling) Gode-Sunder compare the experimental outcomes of the double auction (Smith) with human traders vs random-number generators, called Zero-Intelligence agents → similar results
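
A budget-constrained zero-intelligence market can be sketched in Python as follows. This is a simplified illustration, not Gode and Sunder’s actual experimental software: the single-unit traders, the hypothetical values and costs, the price ceiling of 200, the rule that the order book is cleared after each trade and all function names are assumptions. Traders quote uniformly random prices that never imply a loss, and allocative efficiency is the realized surplus as a share of the maximum possible surplus.

```python
import random

def max_surplus(values, costs):
    """Largest total surplus: match highest values with lowest costs."""
    pairs = zip(sorted(values, reverse=True), sorted(costs))
    return sum(v - c for v, c in pairs if v > c)

def zi_double_auction(values, costs, price_max=200, steps=5000, seed=0):
    """Return allocative efficiency of random, budget-constrained traders."""
    rng = random.Random(seed)
    buyers, sellers = list(values), list(costs)   # traders still in the market
    best_bid = best_ask = None                    # standing (price, value or cost)
    surplus = 0
    for _ in range(steps):
        if not buyers or not sellers:
            break
        if rng.random() < 0.5:
            value = rng.choice(buyers)
            bid = rng.uniform(0, value)           # never bid above own value
            if best_ask is not None and bid >= best_ask[0]:
                surplus += value - best_ask[1]    # trade at the standing ask
                buyers.remove(value)
                sellers.remove(best_ask[1])
                best_bid = best_ask = None        # clear the book after a trade
            elif best_bid is None or bid > best_bid[0]:
                best_bid = (bid, value)
        else:
            cost = rng.choice(sellers)
            ask = rng.uniform(cost, price_max)    # never ask below own cost
            if best_bid is not None and ask <= best_bid[0]:
                surplus += best_bid[1] - cost     # trade at the standing bid
                sellers.remove(cost)
                buyers.remove(best_bid[1])
                best_bid = best_ask = None
            elif best_ask is None or ask < best_ask[0]:
                best_ask = (ask, cost)
    return surplus / max_surplus(values, costs)

# Hypothetical induced values and costs, one unit per trader.
values = list(range(60, 160, 10))                 # buyers' redemption values
costs = list(range(50, 150, 10))                  # sellers' unit costs
efficiency = zi_double_auction(values, costs)
print(f"allocative efficiency: {efficiency:.2f}")
```

Because quotes are constrained by each trader’s own value or cost, every trade yields non-negative surplus, so efficiency always lies between 0 and 1 whatever prices the random quotes produce.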

1994

Deng and Papadimitriou, “On the Complexity of Cooperative Solution Concepts” (seeds of algorithmic game theory)

1995

(institutions) Foundation of the Society for Computational Economics

1996

(Modeling) Santa Fe: Second workshop (papers published in 1997 under the editorship of Arthur, Durlauf and Lane).

(Modeling) Epstein and Axtell, Growing Artificial Societies: Social Science from the Bottom Up. Manifesto for Agent-Based Modeling (Sugarscape, introduction of learning behavior)

1998

(Techniques) Kenneth Judd Numerical Methods for Economists

2000s

(Software): development of Dynare to run, estimate and simulate DSGE models

2001

(Techniques) Nisan and Ronen, “Algorithmic Mechanism Design,” in Games and Economic Behavior

2006

Publication of the papers taken from the 3rd Santa Fe workshop under the editorship of Blume and Durlauf. Idea that mainstream economics is ripe to integrate some complexity ideas. Lack of consensus on the direction Santa Fe research was heading in?

2007

Foundation of the Argonne-University of Chicago ICE (Institute on Computational Economics) : dynamic programming, stochastic modeling, structural estimation and optimization problems with equilibrium constraints

2008

(Modeling/theory) Daskalakis, Goldberg, Papadimitriou, “The Complexity of Computing a Nash Equilibrium”: proves that computing a Nash equilibrium of 3-person games is computationally intractable; also analysis of sponsored search by Edelman, Varian, Mehta et al.

2009

Farmer-Foley Nature Manifesto: “The Economy needs agent-based modeling”

2010s

Using machine learning to adapt econometric techniques to big data challenges?
